AI in Next-Gen Defence & Security Systems

Artificial intelligence has moved from the margins of military R&D to the heart of how nations see, decide and act. The contours of power are tilting towards those who can fuse sensors, software and soldiers into a faster, more resilient decision loop. This feature takes a global view of four pillars shaping the battlefield and the home front alike—autonomous surveillance, AI-driven threat detection, predictive cyber defence, and ethics in warfare—while spotlighting the companies setting the pace: Palantir Technologies, Anduril Industries, Helsing, Rafael Advanced Defense Systems, and Thales Group.

The autonomy shift: watchkeepers that never blink

Autonomous surveillance systems are no longer experimental curiosities. They are becoming the quiet constants of modern defence: patrolling borders, guarding critical infrastructure, and feeding commanders uninterrupted, high-fidelity situational awareness.

Three dynamics stand out:

- Edge AI everywhere. Sensors on drones, towers, vehicles and buoys are now paired with on-board inference so video, radar and acoustic feeds are triaged at the source. Instead of streaming oceans of raw data, systems transmit only what matters: detected objects, tracks, anomalies. This saves bandwidth, shrinks latency and keeps capability alive even in contested environments.

- From single assets to cooperating swarms. Small, attritable air and ground robots that collaborate are proving more useful than a single exquisite platform. They cover more ground, re-task themselves when one unit fails, and collectively map, classify and track with greater reliability.

- Human-on-the-loop control. Autonomy is calibrated to the mission. For routine patrols, systems operate independently with guardrails. For sensitive tasks, humans remain close, approving escalations and overrides.

Anduril Industries has been a catalyst in this shift. Its software-defined approach binds sensors, counter-UAS nodes and unmanned systems with a common autonomy and command layer. The value is less in any single drone and more in the lattice that lets many assets “see as one”. For border protection, base defence and maritime domain awareness, this orchestration turns disparate kit into a coherent, self-healing network.

Europe’s answer has arrived through Helsing. Born of a conviction that democratic states need sovereign AI for defence, it focuses on “battlefield AI”: perception, electronic warfare cues and decision support that can be fielded rapidly. Its emphasis on scalable production of unmanned systems—and on software that is hardware-agnostic—gives European forces a route to mass without sacrificing control or values.

Thales Group brings autonomy to legacy strengths. In radars, optronics and sonars, AI now helps distinguish signal from noise in fractions of a second, particularly against low-observable, low-altitude threats. On aircraft and ships alike, that means fewer missed detections and faster classification, especially in cluttered environments where traditional algorithms struggle.

Why it matters: autonomy expands coverage, compresses timelines and reduces risk to personnel. But it also demands new tradecraft: designing missions around probability and confidence scores, setting thresholds for intervention, and training operators to supervise fleets of systems rather than pilot a single one.
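To make the idea of confidence thresholds concrete, here is a minimal Python sketch of edge triage with a human-on-the-loop escalation path. It is illustrative only: the `Detection` fields, the `triage` function and the 0.5 and 0.9 cut-offs are assumptions chosen for the example, not values drawn from any fielded system.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    DISCARD = "discard"      # stays on the sensor, nothing is sent
    TRANSMIT = "transmit"    # compact track message goes upstream
    ESCALATE = "escalate"    # a human supervisor must approve next steps


@dataclass(frozen=True)
class Detection:
    track_id: str
    label: str
    confidence: float        # model score in [0, 1]
    sensitive: bool = False  # hypothetical flag for mission-sensitive classes


def triage(det: Detection, transmit_at: float = 0.5, escalate_at: float = 0.9) -> Action:
    """Decide what an edge node does with one detection (assumed thresholds)."""
    if not 0.0 <= det.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if det.confidence < transmit_at:
        return Action.DISCARD
    # Sensitive classes and very confident detections go to a human.
    if det.sensitive or det.confidence >= escalate_at:
        return Action.ESCALATE
    return Action.TRANSMIT


if __name__ == "__main__":
    for d in (
        Detection("t1", "bird", 0.20),
        Detection("t2", "vehicle", 0.70),
        Detection("t3", "person", 0.60, sensitive=True),
    ):
        print(d.track_id, triage(d).value)
```

In practice the thresholds would be mission parameters rather than constants, tightened for sensitive tasks and relaxed for routine patrols.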

Seeing faster: AI for threat detection and decision advantage

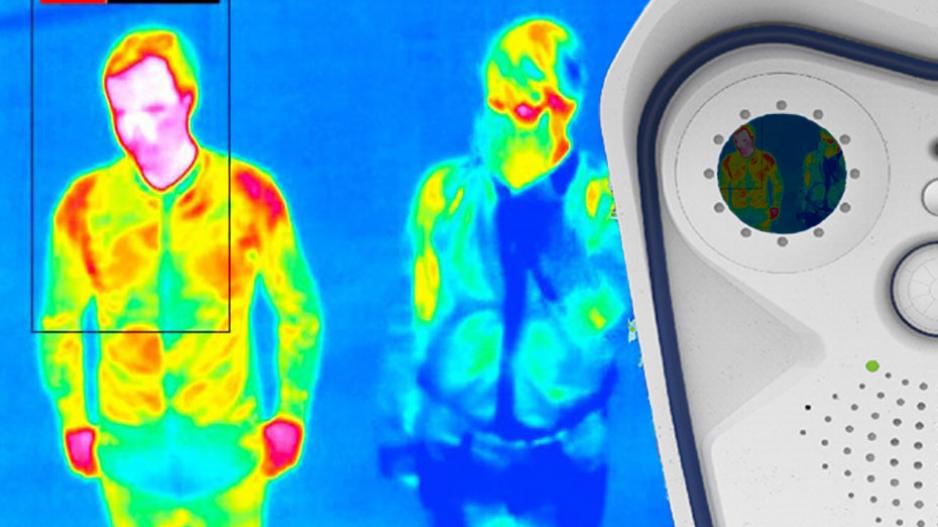

Intelligence, surveillance and reconnaissance (ISR) used to be a marathon of human interpretation. AI has turned it into a sprint. The modern challenge isn’t gathering data—it’s extracting decision-grade insight from it at speed.

Palantir Technologies sits at the nerve centre of this change. Its platforms integrate satellite imagery, signals, HUMINT, logistics and operational feeds into a common operating picture. On top of that picture, model libraries—vision models, pattern-of-life models, language models—flag targets, correlate events and surface recommended courses of action. When a sensor detects a vehicle, for example, Palantir-style workflows link it to recent comms intercepts, known safe houses and terrain analysis to estimate intent and risk—then push options to a commander with provenance intact.

Rafael Advanced Defense Systems embodies AI at the engagement edge. Air and missile defence now hinges on rapid, often automated triage: which tracks are real, which are decoys, which are the most dangerous, what is the best interception stack? AI improves both discrimination and fire control, allowing systems to cope with saturation attacks and more manoeuvrable threats. In precision strike, embedded computer vision sustains lock through smoke, clutter and counter-measures, lightening the operator’s cognitive load.

Thales again illustrates the sensor-to-decision chain. Neural processing close to the sensor accelerates detection; fusion engines reconcile conflicting inputs; explainable classifiers present rationale to the operator. The result is a human who understands why the system is confident, not just what it thinks—vital for trust and accountability.

Across all these cases, the real step-change is tempo. When classification, correlation and deconfliction happen in near-real time, commanders can move from reactive to anticipatory. That reshapes tactics: dispersed units can mass effects without massing forces, and counter-battery fire can launch before the first impact lands.

Predictive cyber defence: fighting wars you cannot see

Every conflict now has a cyber underlay. Power grids, ports, satellites, logistics ERPs and weapon networks are contested on timelines measured in milliseconds. Traditional rule-based security reacts to known signatures. AI-native cyber defence learns what “normal” looks like and hunts for the faint signals of compromise.

Key capabilities maturing in 2025:

- Behavioural baselining. Unsupervised models profile users, machines and applications, flagging deviations that may signal lateral movement, data exfiltration or command-and-control beacons, even when the malware is novel.

- Automated triage and response. Classifiers assign severity, map likely kill chains and trigger containment playbooks. Low-risk anomalies are suppressed; high-risk events escalate with context for human decision.

- Cross-domain correlation. Cyber telemetry is fused with physical sensors and intel feeds. A spike in network anomalies near a radar site during a drone incursion is treated differently from the same anomaly during a training day.
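The three capabilities above can be sketched in a few lines of Python. This is a deliberately simple stand-in: a z-score against a historical baseline replaces the unsupervised models the text describes, and the function names, the threshold of 3.0 and the `physical_alert` flag are all assumptions made for illustration.

```python
from statistics import mean, stdev


def zscore(baseline: list[float], observed: float) -> float:
    """How many standard deviations `observed` sits from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs >= 2 points
    if sigma == 0:
        return 0.0 if observed == mu else float("inf")
    return (observed - mu) / sigma


def severity(z: float, physical_alert: bool, z_threshold: float = 3.0) -> str:
    """Triage an anomaly, using physical-domain context to set urgency."""
    if abs(z) < z_threshold:
        return "suppress"  # within normal behaviour
    # Same cyber anomaly, different handling depending on physical context.
    return "escalate" if physical_alert else "review"


if __name__ == "__main__":
    # Hypothetical outbound traffic (MB per hour) for one host on normal days.
    baseline = [100.0, 102.0, 98.0, 101.0, 99.0]
    z = zscore(baseline, 120.0)
    print(severity(z, physical_alert=False))  # training day
    print(severity(z, physical_alert=True))   # drone incursion nearby
```

The design point is that the anomaly score and the response decision are separate steps, so context from other domains can change the response without retraining the detector.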

Defence integrators and primes are embedding these approaches into platform lifecycles: secure-by-design firmware; model-based systems engineering that includes red-team AI; and digital twins for cyber range testing before deployment. Palantir contributes by unifying intelligence and SOC data so that threat actors, infrastructure and behaviour can be tracked as living graphs rather than static indicators. Thales, with a deep cyber practice, pushes assurance regimes for AI itself—hardening models against data poisoning, adversarial inputs and model theft.

The upshot: cyber defence is shifting from a game of signatures to a game of inference. Those who can detect, attribute and respond in minutes, not days, will keep their critical functions online when it counts.

Ethics and the rules of algorithmic engagement

Power without principle invites peril. The debate over autonomous weapons and AI-enabled targeting has sharpened as the technology has matured. Three guiding themes are emerging among responsible states and suppliers:

- Human responsibility is never abdicated. Whether the system is “in the loop” or “on the loop,” accountability rests with human commanders and developers. That means traceability: data lineage, model versioning, audit trails and post-action explainability.

- Mission-tailored human control. High-speed air and missile defence may demand autonomous engagement within strict parameters; counter-insurgency and urban operations demand closer human scrutiny. Policies and technical guardrails should reflect that nuance, not a one-size-fits-all doctrine.

- Lawful by design. Compliance with international humanitarian law (distinction, proportionality, military necessity) must be engineered into requirements. That includes bias testing in training data, robust model validation and conservative fail-safes where ambiguity persists.
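One way to picture the traceability requirement is a tamper-evident audit trail, in which every record carries a hash of the one before it. The Python sketch below is an assumption-laden illustration: the record fields (`model_version`, `operator`, `decision`) and the helper names are hypothetical, and a real system would add signing, secure storage and access control.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first record


def _digest(event: dict, prev_hash: str) -> str:
    payload = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_record(log: list[dict], event: dict) -> dict:
    """Append an event, chaining it to the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    record = {"event": event, "prev_hash": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(record)
    return record


def verify(log: list[dict]) -> bool:
    """Return True only if no record has been altered, removed or reordered."""
    prev_hash = GENESIS
    for record in log:
        if record["prev_hash"] != prev_hash:
            return False
        if record["hash"] != _digest(record["event"], record["prev_hash"]):
            return False
        prev_hash = record["hash"]
    return True


if __name__ == "__main__":
    trail: list[dict] = []
    append_record(trail, {"model_version": "1.4.2", "operator": "op-17", "decision": "hold"})
    append_record(trail, {"model_version": "1.4.2", "operator": "op-17", "decision": "escalate"})
    print(verify(trail))
```

Because each hash depends on everything before it, editing an earlier record after the fact breaks verification for the whole chain, which is what makes after-action review credible.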

Industry leaders are aligning around “trusted AI” as a competitive differentiator. Thales emphasises explainability and operator primacy in its human-machine teaming. Palantir foregrounds governance features: model cards, policy constraints and granular permissions. Helsing positions itself explicitly as an AI partner for democracies, building to European expectations on privacy, oversight and sovereignty. Anduril designs autonomy that can scale, but with clear authorisation layers for the use of force. Rafael embeds doctrine into software so automated prioritisation mirrors command intent, not merely algorithmic efficiency.

This is not window-dressing. Trustworthy AI shortens adoption cycles, eases coalition interoperability and reduces strategic risk. It also reassures publics that technological edge does not come at the expense of human judgment.

Strategy: mass, software and coalition power

The strategic conversation in 2025 is no longer “AI—yes or no?” It is “How do we field AI at scale, safely, across a force and its allies?” Three answers are coalescing.

1) Mass via attritable autonomy. Rather than a handful of exquisite platforms, forces are pursuing affordable, upgradable unmanned systems in large numbers. Anduril and Helsing represent two pathways to that mass: software-first integration across many low-cost assets, and European-made autonomy that aligns with local industrial policy. The centre of gravity is shifting towards orchestrating many things well, not just building one thing perfectly.

2) Software as the decisive layer. Whoever controls the stack—from data pipelines to model ops to mission apps—controls tempo. Palantir’s role in turning heterogeneous data into decisions illustrates why data architecture is now war-fighting infrastructure. Thales and Rafael show how primes are baking AI into sensors and effectors so software upgrades deliver battlefield gains without waiting for new airframes or hulls.

3) Coalition by design. Modern operations are joint by default and multinational by necessity. That demands interoperable data standards, portable models and policy-aware sharing. Suppliers that can deliver “configurable trust”—the right data, to the right ally, at the right classification—will become indispensable.

What good looks like in 2025–2027

Leaders asking “Are we doing AI right?” can look for these markers:

- Clear problem framing. Start from operational pains (slow targeting cycles, saturated watch floors, brittle cyber posture), not from shiny tools. The best programmes fix a measurable bottleneck within months.

- Data discipline. Curated, labelled, governed data is the fuel. Invest in pipelines, metadata and access controls before model sprawl sets in.

- User-centred design. Put operators in the loop of development. The winning tools reduce clicks, surface rationale and fit existing workflows.

- Continuous testing. Treat AI like a living system. Red-team models, simulate edge cases, monitor drift and adopt a patch cadence more like tech than legacy defence.

- Ethics baked in. Make assurance a first-class requirement: bias checks, fail-safes, override protocols, and transparent after-action reviews.
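Drift monitoring, mentioned under continuous testing, can be as simple as comparing the distribution of a model's recent scores with the distribution seen at validation time. The sketch below uses the Population Stability Index as one possible measure; the choice of PSI, the ten equal-width bins and the function name are assumptions for illustration, and 0.25 is only a commonly cited rule of thumb for a significant shift.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a reference and a recent sample."""
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("reference sample has no spread")
    width = (hi - lo) / bins

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped to the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps avoids log(0) when a bin is empty.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    validation_scores = [i / 100 for i in range(100)]       # reference sample
    recent_scores = [0.5 + i / 200 for i in range(100)]     # shifted upwards
    value = psi(validation_scores, recent_scores)
    print("drift suspected" if value > 0.25 else "stable")
```

A check like this would typically run on a schedule, with a breach triggering the red-team and revalidation steps rather than an automatic model change.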

Company snapshots: where they’re strongest

- Palantir Technologies (USA): Data integration, model orchestration and decision support at enterprise scale. Strength in turning heterogeneous intelligence and operational data into actionable options with traceability and governance.

- Anduril Industries (USA): Autonomy at the edge and system-of-systems integration. Strength in creating resilient defensive networks and swarms that are simple to deploy, upgrade and scale.

- Helsing (Germany): European sovereign AI, spanning perception, EW-aware analytics and rapid fielding of unmanned systems. Strength in aligning cutting-edge capability with European security, industrial and ethical priorities.

- Rafael Advanced Defense Systems (Israel): AI-enabled engagement management and smart munitions. Strength in fusing sensors and effectors under pressure against diverse aerial and surface threats.

- Thales Group (France): Trusted AI across sensors, platforms and cyber. Strength in explainable classification, sensor fusion and human-machine teaming for air, land, sea and space.

The human factor

For all the talk of autonomy, the decisive edge remains human. Analysts who can interpret model outputs with healthy scepticism. Commanders who understand when to trust the machine and when to slow down. Engineers who design for failure, not just success. Policy-makers who set clear boundaries, then empower responsible experimentation.

The most capable forces aren’t those with the flashiest demos—they’re the ones who marry mission clarity with software competence and moral seriousness. They train people to supervise systems, not micromanage them. They iterate fast, but never outrun their own governance. They invite scrutiny, because sunlight hardens systems.

Closing note: urgency without panic

AI will not magically solve strategy, doctrine or deterrence. But it is already transforming the daily grind of defence and security—watch, detect, decide, act. Autonomous surveillance widens the lens. AI-driven detection sharpens the picture. Predictive cyber defence hardens the unseen flank. Ethics and governance keep the enterprise worthy of public trust.

For ministries, commanders and industry partners, the message is pragmatic and hopeful: start where the pain is, scale what works, measure relentlessly, and keep humans in charge. Do that, and AI becomes not a fashionable accessory, but a durable advantage that protects lives and preserves peace.